(This is chapter 8 of Virtual Body Language. Extra images have been added for this post. An earlier variation of this blog post was published here.)

The brain can rewire its neurons, growing new connections, well into old age. Marked recovery is often possible even in stroke victims, porn addicts, and obsessive-compulsive sufferers, several years after the onset of their conditions. Neural plasticity has now been demonstrated in adults, not just in babies and young children going through the critical learning stages of life. This subject has been popularized by books like The Brain that Changes Itself (Doidge 2007).

Like language grammar, music theory, and dance, visual language can be developed throughout life. As visual stimuli are taken in repeatedly, our brains reinforce neural networks for recognizing and interpreting them.

Some aspects of visual language are easily learned, and some may even be instinctual. Biophilia is the human aesthetic appreciation of biological form and all living systems (Kellert and Wilson 1993). The flip side of aesthetic biophilia is a readiness to learn disgust (at feces and certain smells) or fear (of large insects or snakes, for instance). Easy recognition of the shapes of branching trees, puffy clouds, flowing water, and plump fruit, and of subtle differences in shades of green and red, has laid a foundation for human aesthetic response to all things, natural or manufactured. Our biophilia underlies much of our visual design, even in areas as urban as abstract painting, web page layout, and office furniture design.

The Face

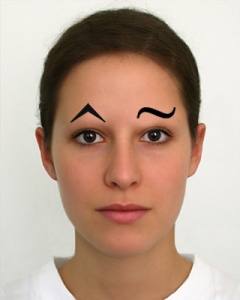

We all have visual language skills that make us especially sensitive to eyebrow motion, eye contact, head orientation, and mouth shape. Our sensitivity to facial expression may even influence other, more abstract forms of visual language, making us responsive to some visual signals more than others—because those face-specific sensitivities gave us an evolutionary advantage as a species so dependent on social signaling. This may have been reinforced by sexual selection.

Even visual features as small as the pupil of an eye contribute to the emotional reading of a face—usually unconsciously. Perhaps the evolved sensitivity to small black dots as information-givers contributed to our subsequent invention of small-yet-critical elements in typographical alphabets.

We see faces in clouds, trees, and spaghetti. Donald Norman wrote a book called Turn Signals Are the Facial Expressions of Automobiles (1992). Recent studies using fMRI scans show that car aficionados use the same brain modules to recognize cars that people in general use to recognize faces (Gauthier et al. 2003).

Something about the face appears to be baked into the brain.

Dog Smiling

We smile with our faces. Dogs smile with their tails. The next time you see a Doberman Pinscher with his ears clipped and his tail removed (docked), imagine yourself with your smile muscles frozen dead and your eyebrows shaved off.

Just as humans are tuned to perceive human smiles, the canine brain likely has neural wiring highly tuned to recognize and process a certain kind of visual energy: an oscillating motion of a linear shape occurring near the backside of another dog. The retina goes to work doing edge detection and motion detection so it can send efficient signals to the brain for rapid space-time pattern recognition.

Tail wagging is apparently a learned behavior: puppies don’t wag until they are one or two months old. Recent findings show that a wagging dog favors the right side (from the dog’s own point of view) when it feels fundamentally positive about something, and favors the left side when its feelings have negative overtones (Quaranta et al. 2007). This was not commonly known until recently. Could it be that left-right wagging asymmetry has always been a subtle part of canine-to-canine body language?
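
The left/right bias can be reduced to a single number. What follows is a minimal sketch, not the measurement method of Quaranta et al.: it assumes tail angles sampled over time in degrees, with positive values meaning swings to the dog’s right, and the function name `wag_asymmetry` and the (R - L)/(R + L) index are illustrative choices.

```python
def wag_asymmetry(angles_deg):
    """Return an asymmetry index in [-1, 1] for a sequence of tail angles.

    Positive angles are taken to be swings to the dog's right and negative
    angles swings to its left. The index compares the peak excursion on each
    side: +1 means the wag is entirely right-biased, -1 entirely left-biased,
    and 0 means symmetric.
    """
    right = max((a for a in angles_deg if a > 0), default=0.0)
    left = max((-a for a in angles_deg if a < 0), default=0.0)
    total = right + left
    if total == 0:
        return 0.0  # the tail never left the midline, so there is no wag to measure
    return (right - left) / total


# Hypothetical tail angles in degrees (invented, not data from the study).
happy_wag = [0, 30, 10, -20, 0, 32, 5, -18]
print(round(wag_asymmetry(happy_wag), 2))  # prints 0.23
```

A positive index marks a right-biased wag, which in the study’s terms would point toward a more positive emotional state; the sample angles are made up for illustration.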

I often watch intently as my terrier mix, Higgs, encounters a new dog in the park whom he has never met. The two dogs approach each other—often moving very slowly and cautiously. If the other dog has its tail down between its legs and its ears and head held down, and is frequently glancing to the side to avert eye contact, this generally means it is afraid, shy, or intimidated. If its tail is sticking straight up (and not wagging) and if its ears are perked up and the hair on the back is standing on end, it could mean trouble. But if the new stranger is wagging its tail, this is a pretty good sign that things are going to be just fine. Then a new phase of dog body language takes over. If Higgs is in a playful mood, he’ll start a series of quick motions, squatting his chest down to assume the “play bow”, jumping and stopping suddenly, and watching the other dog closely (probably to keep an eye on the tail, among other things). If Higgs is successful, the other dog will accept his invitation, and they will start running around the park, chasing each other, and having a grand old time. It is such a joy to watch dogs at play.

So here is a question: assuming dogs have an instinctive (or easily learned) ability to process the body language of other dogs, such as wagging tails, can the same occur in humans processing canine body language? Did I have to learn to read canine body language from scratch, or was I born with this ability? I am, after all, a member of a species that has been co-evolving with canines for more than ten thousand years, sometimes in deeply symbiotic relationships. So perhaps I, Homo sapiens, already have a biophilic predisposition to canine body language.

Recent studies using dogs as therapy for children with autism have shown remarkable results: these children become more socially and emotionally connected. According to autistic author Temple Grandin, this is because animals, like people with autism, do not experience ambivalent emotion; their emotions are pure and direct (Grandin and Johnson 2005). Perhaps canines helped to modulate, buffer, and filter human emotions throughout our symbiotic evolution. They may have helped to offset a tendency towards neurosis and cognitive chaos.

…

Vestigial Response

Having a dog in the family provides a continual reminder of my affiliation with canines, not just as companions, but as Earthly relatives. On a few rare occasions while sitting quietly at home working on something, I remember hearing an unfamiliar noise somewhere in the house. But before I even knew consciously that I was hearing anything, I felt a vague tug at my ears; it was not an entirely comfortable feeling. This may have happened at other times, but I probably didn’t notice.

I later learned that this is a vestigial response inherited from our ancestors.

Some people can wiggle their ears thanks to muscles that are basically useless in humans but were once used by our ancestors to aim their ears toward a sound. Remembering that tug on my ears gives me a sense of connection to my ancestors’ physical experiences.

In a moment we will consider how these primal vestiges might be coming back into modern currency. But I’m still in a meandering, storytelling, pipe-smoking kind of mood, so hang with me just a bit longer and then we’ll get back to the subject of avatars.

This vestigial response is called ear perking. It is shared by many of our living mammalian relatives, including cats. I remember once hanging out and playing mind-games with a cat. I was sitting on a couch, and the cat was sitting in the middle of the floor, looking away, pretending to ignore me (but of course—it’s a cat).

I was trying to get the cat to look at me or to acknowledge me in some way. I called its name, I hissed, clicked my tongue, and clapped my hands. Nothing. I scratched the upholstery on the couch. Nothing. Then I tore a small piece of paper from my notebook, crumpled it into a tiny ball, and discreetly tossed it onto the floor, behind the cat, outside of its field of view. The tiny sound of the crumpled ball of paper falling to the floor caused one of the cat’s ears to swivel around and aim towards the sound. I jumped up and yelled, “Hah—got you!” The cat’s higher brain quickly tugged at its ear, pulling it back into place, and the cat continued to serenely look off into the distance, as if nothing had ever happened.

Cat body language is harder to read than dog body language. Perhaps that’s by design (I mean…evolution). Dogs don’t seem to have the same talents of reserve and constraint. Dog expressions just flop out in front of you. And their vocabulary is quite rich. Most world languages have several dog-related words and phrases. We easily learn to recognize the body language of dogs, and even less familiar social animals. Certain forms of body language are processed by the brain more easily than others. A baby learns to respond to its mother’s smile very soon after birth. Learning to read a dog’s tail motions may not be so instinctive, but the plastic brain of Homo Sapiens is ready to adapt. Learning to read the signs that your pet turtle is hungry would be much harder (I assume). At the extreme: learning to read the signals from an enemy character in a complicated computer game may be a skill only for a select few elite players.

This I’m sure of: if we had tails, we’d be wagging them for our dogs when they are being good (and for that matter, we’d also be wagging them for each other :D ). It would not be easy to add a tail to the base of a human spine and wire up the nerves and muscles. But if it could be done, our brains would easily and happily adapt, recruiting some appropriate system of neurons for the purpose of wagging the tail (perhaps re-adapting a system of neurons normally dedicated to doing The Twist). While it may not be easy to adapt our bodies to acquire such organs of expression, our brains can easily adapt. And that’s where avatars come in.

Furries

The Furry Species has proliferated in Second Life. Furry fandom is a subculture featuring fictional anthropomorphic animal characters with human personalities and human-like attributes. Furry fandom already had a virtual head start: people were role-playing with their online “fursonas” before Second Life existed (witness FurryMUCK, a user-extendable, text-based online role-playing game started in 1990). Furries have animal heads, tails, and other such features.

While many Second Life avatars have true animal forms (such as the “ferals” and the “tinies”), many are anthropomorphic: walking upright with human postures and gaits. This anthropomorphism has many creative expressions, ranging from quaint and cute to mythical, scary, and kinky.

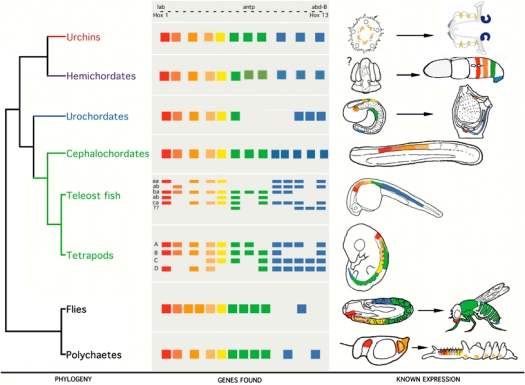

Furry anthropomorphism in Second Life is appropriate in one sense: there is no way to directly change the Second Life avatar into a non-human form. The underlying avatar skeleton software is based solely on the upright-walking human form. I know this fact in an intimate way, because I spent several months digging into the code in an attempt to reconstitute the avatar skeleton to allow open-ended morphologies (quadrupeds, etc.). This proved to be difficult. And no surprise: the humanoid avatar morphology code, and all its associated animations (procedural and otherwise), had been in place for several years. It serves a purpose similar to that of a group of genes in our DNA called Hox genes.

They are baked deep into our genetic structure, and are critical to the formation of a body plan. Hox genes specify the overall structure of the body and are critical in embryonic development when the segmentation and placement of limbs and other body parts are first established. After struggling to override the effects of the Second Life “avatar Hox genes”, I concluded that I could not do this on my own. It was a strategic surgical process that many core Linden Lab engineers would have to perform. Evolution in virtual worlds happens fast. It’s hard to go back to an earlier stage and start over.

Despite the anthropomorphism of the Second Life avatar (or perhaps because of this constraint), the Linden Scripting Language (LSL) and other customization tools have provided a means for some remarkably creative workarounds, including packing the avatar geometry into a small compact form called a “meatball”, and then surrounding it with a custom 3D object, such as a robot or a dragon or some abstract form, complete with responsive animations and particle system effects.

Perhaps furry residents prefer having this constraint of anthropomorphism; it fits with their nature as hybrids. Some furry residents have customized tail animations and use them to express mood and intent. I wouldn’t be surprised if those who have been furries for many years have dreams in which they are living and expressing themselves in their Furry bodies, communicating with tails, ears, and all. Most would agree that customizing a Furry with a wagging-tail animation is a lot easier than undergoing surgery to attach a physical tail.

But as far as the brain is concerned, it may not make a difference.

Where Does my Virtual Body Live?

Virtual reality is not manifest in computer chips, computer screens, headsets, keyboards, mice, joysticks, or head-mounted displays. Nor does it live in running software. Virtual reality manifests in the brain, and in the collective brains of societies. The blurring of real and virtual experiences is a theme that Jeremy Bailenson and his team at Stanford’s Virtual Human Interaction Lab have been researching.

Virtual environments are now being used for research in the social sciences, as well as for the treatment of many brain disorders. An amputee who has suffered from phantom pain for years can sometimes be relieved of the pain through a disciplined and focused regimen of rewiring his or her body image to effectively “amputate” the phantom limb. Immersive virtual reality has been used recently to treat this problem (Murray et al. 2007). Such simulations offer an alternative to earlier techniques that used physical mirrors, allowing more controlled settings and adjustments. When the brain’s body image drifts too far from reality, psychological problems such as anorexia can ensue. Having so many super-thin sex-symbol avatars may not be helping the situation. On the other hand, virtual reality is being used in research to treat this and other body image-related disorders.

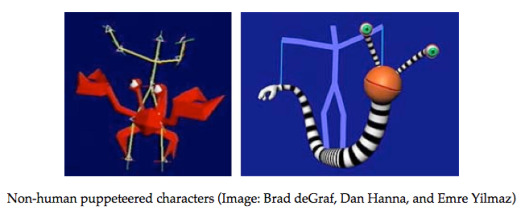

A creative kind of short-term body image shifting is nothing new to animators, actors, and puppeteers, who routinely tweak their own body images in order to move and express themselves like ostriches, hummingbirds, or dogs. When I was working for Brad deGraf, one of the early pioneers in real-time character animation, I would frequent the offices of Protozoa, a game company he founded in the mid-90s.

I was hired to develop a tool for modeling 3D trees which were to be used in a game called “Squeezils”, featuring animals that scurry among the branches. I remember the first time I went to Protozoa. I was waiting in the lobby to meet Brad, and I noticed a video monitor at the other end of the room. An animation was playing that had a couple of 3D characters milling about. One of them was a crab-like cartoon character with huge claws, and the other was a Big Bird-like character with a very long neck. After gazing at these characters for a while, it occurred to me that both of these characters were moving in coordination—as if they were both being puppeteered by the same person. My curiosity got the best of me and I started wandering around the offices, looking for the puppet master. I peeked around the corner. In the next room were a couple of motion capture experts, testing their hardware. On the stage was a guy wearing motion capture gear. When his arms moved, so did the huge claws of the crab—and so did the wimpy wings of the tall bird. When his head moved, the eyes of the crab looked around, and the bird’s head moved around on top of its long neck.

Brad deGraf and puppeteer/animator Emre Yilmaz call this “…performance animation…a new kind of jazz. Also known as digital puppetry…it brings characters to life, i.e. ‘animates’ them, through real-time control of the three-dimensional computer renderings, enabled by fast graphics computers, live motion sampling, and smart software” (deGraf and Yilmaz 1999). Applying human-sourced motion to exaggerated cartoon forms stimulates the imagination: “motion capture” escapes its negative association with dull, unimaginative, literal recording. It inspires the human controller to think more like a puppeteer than an actor. Puppeteering is more out-of-body than acting. Anything can become a puppet (a sock, a salt shaker, a rubber hose).

deGraf and Yilmaz make this out-of-body transference possible by “re-proportioning” the data stream from the human controller to fit non-human anatomies.
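
The idea of re-proportioning can be sketched as a per-joint retargeting table. This is not deGraf and Yilmaz’s system; it is a minimal sketch assuming each puppet joint is driven by a single performer joint through a linear gain and offset, clamped to the puppet’s joint limits. The joint names, gains, and limits are hypothetical, loosely modeled on the crab and bird characters at Protozoa.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    source: str    # joint on the human performer (hypothetical name)
    gain: float    # exaggeration factor applied to the human motion
    offset: float  # rest-pose correction for the puppet, in degrees
    lo: float      # lower puppet joint limit, in degrees
    hi: float      # upper puppet joint limit, in degrees


# Hypothetical mapping: one performer drives a crab and a tall bird at once.
RETARGET_MAP = {
    "crab.claw_left": Channel("human.elbow_left", gain=1.5, offset=-10.0, lo=0.0, hi=120.0),
    "crab.eye_yaw": Channel("human.head_yaw", gain=2.0, offset=0.0, lo=-60.0, hi=60.0),
    "bird.wing_left": Channel("human.elbow_left", gain=0.4, offset=5.0, lo=0.0, hi=45.0),
    "bird.neck_yaw": Channel("human.head_yaw", gain=0.8, offset=0.0, lo=-90.0, hi=90.0),
}


def retarget(human_pose: dict[str, float]) -> dict[str, float]:
    """Map one frame of human joint angles onto every puppet joint."""
    puppet_pose = {}
    for target, ch in RETARGET_MAP.items():
        value = human_pose.get(ch.source, 0.0) * ch.gain + ch.offset
        puppet_pose[target] = min(max(value, ch.lo), ch.hi)  # respect joint limits
    return puppet_pose


frame = {"human.elbow_left": 40.0, "human.head_yaw": 20.0}
print(retarget(frame))
```

Because the same elbow angle feeds both characters with different gains, one arm motion opens the crab’s claw widely while barely moving the bird’s wing.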

Research by Nick Yee found that people’s behaviors change as a result of having different virtual representations, a phenomenon he calls the Proteus Effect (Yee 2007). Artist Micha Cardenas spent 365 hours continuously as a dragon in Second Life. She employed a Vicon motion capture system to translate her motions into the virtual world. This project, called “Becoming Dragon”, explored the limits of body modification, “challenging” the one-year transition requirement that transgender people face before gender confirmation surgery. Cardenas told me, “The dragon as a figure of the shapeshifter was important to me to help consider the idea of permanent transition, rejecting any simple conception of identity as tied to a single body at a single moment, and instead reflecting on the process of learning a new way of moving, walking, talking and how that breaks down any possible concept of an original or natural state for the body to be in” (Cardenas 2010).

With this performance, Cardenas wanted to explore not only the issues surrounding gender transformation, but the larger question of how we experience our bodies, and what it means to inhabit the body of another being—real or simulated. During this 365-hour art-performance, the lines between real and virtual progressively blurred for Cardenas, and she found herself “thinking” in the body of a dragon. What interests me is this: how did Micha’s brain adapt to be able to “think” in a different body? What happened to her body image?

The Homunculus

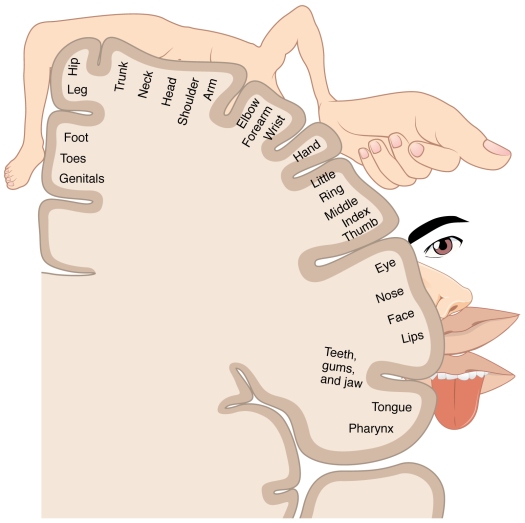

Dr. Wilder Penfield was operating on a patient’s brain to relieve symptoms of epilepsy. In the process he made a remarkable discovery: each part of the body is associated with a specific region in the brain. He had discovered the homunculus: a map of the body in the brain. The homunculus is an invisible cartoon character. It can only be “seen” by carefully probing parts of it and asking the patient what he or she is feeling, or by watching different parts of the body twitch in response. Most interesting is the fact that some parts of the homunculus are much larger than others, totally out of proportion with normal human anatomy. There are two primary “homunculi” (sensory and motor), and their distorted proportions reflect how much cortex is dedicated to each region of the body.

For instance, eyes, lips, tongue, and hands are proportionately large, whereas the skull and thighs are proportionately small. I would not want to encounter a homunculus while walking down the street or hanging out at a party—homunculi are not pretty—in fact, I find them quite frightening. Indeed they have cropped up in haunting ways throughout the history of literature, science, and philosophy.

But happily, for purposes of my research, any homunculus I encounter would be very good at nonverbal communication, because eyes, mouth, and hands are major expressive organs of the body. As a general rule, the parts of our body that receive the most information are also the parts that give the most. What would a “concert pianist homunculus” look like? Huge fingers. How about a “soccer player homunculus”? Gargantuan legs and tiny arms.

The Homuncular Avatar

Avatar code etches embodiment into virtual space. A “communication homunculus” was sitting on my shoulder while I was working at There.com and arguing with some computer graphics guys about how to engineer the avatar. Chuck Clanton and I were both advocates for dedicating more polygons and procedural animation capability to the avatar’s hands and face. But polygons are graphics-intensive (at least they were back then), and procedural animation takes a lot of engineering work. Consider this: in order to properly animate two articulated avatar hands, you need at least twenty extra joints, on top of the approximately twenty joints used in a typical avatar animation skeleton. That’s nearly twice as many joints.
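
The joint arithmetic can be checked against a toy skeleton. This is a minimal sketch, assuming a hypothetical 20-joint body rig (not There.com’s actual skeleton) stored as a child-to-parent map, and two joints per finger; many hand rigs use three per finger, which raises the total further.

```python
# Hypothetical 20-joint body skeleton as a child -> parent map (not There.com's rig).
BODY = {
    "pelvis": None, "spine1": "pelvis", "spine2": "spine1", "chest": "spine2",
    "neck": "chest", "head": "neck",
    "l_collar": "chest", "l_shoulder": "l_collar", "l_elbow": "l_shoulder", "l_wrist": "l_elbow",
    "r_collar": "chest", "r_shoulder": "r_collar", "r_elbow": "r_shoulder", "r_wrist": "r_elbow",
    "l_hip": "pelvis", "l_knee": "l_hip", "l_ankle": "l_knee",
    "r_hip": "pelvis", "r_knee": "r_hip", "r_ankle": "r_knee",
}

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def add_hands(skeleton, joints_per_finger=2):
    """Return a copy of the skeleton with a chain of finger joints on each wrist."""
    result = dict(skeleton)
    for side in ("l", "r"):
        for finger in FINGERS:
            parent = f"{side}_wrist"
            for i in range(1, joints_per_finger + 1):
                name = f"{side}_{finger}{i}"
                result[name] = parent  # each knuckle hangs off the previous one
                parent = name
    return result


print(len(BODY), len(add_hands(BODY)))  # prints: 20 40
```

With `joints_per_finger=3` the same function yields 50 joints.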

The argument for full hand articulation was as follows: like the proportions of cortex dedicated to these communication-intensive areas of the body, socially-oriented avatars should have ample resources dedicated to face and hands.
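
One way to make the homuncular argument concrete is to split a fixed resource budget (polygons or joints) across body regions in proportion to how much each region contributes to communication. This is a minimal sketch with hypothetical integer weights; the largest-remainder rounding is an assumed design choice that guarantees the parts add up exactly to the budget.

```python
def allocate_budget(total, weights):
    """Split an integer budget (polygons, joints, ...) across body regions in
    proportion to integer weights, using largest-remainder rounding so the
    shares always sum exactly to `total`."""
    weight_sum = sum(weights.values())
    shares = {part: total * w // weight_sum for part, w in weights.items()}
    remainders = {part: total * w % weight_sum for part, w in weights.items()}
    leftover = total - sum(shares.values())
    # Give any remaining units to the regions that lost the most to rounding down.
    for part in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        shares[part] += 1
    return shares


# Hypothetical "communication homunculus" weights: face and hands dominate.
weights = {"face": 4, "hands": 4, "torso": 1, "legs": 1}
print(allocate_budget(5000, weights))
# prints {'face': 2000, 'hands': 2000, 'torso': 500, 'legs': 500}
```

Shifting weight from torso and legs toward face and hands is the avatar-engineering analogue of the enlarged lips and fingers on the cortical map.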

When the developers of Traveler were faced with the problem of how to squeeze the most out of the few polygons they could render on the screen at any given time, they decided to just make avatars as floating heads—because their world centered on vocal communication (telephone meets avatar). The developers of ActiveWorlds, which had a similar polygonal predicament, chose whole avatar bodies (and they were quite clunky by today’s standards).

These kinds of choices determine where and how human expression will manifest.

Non-Human Avatars

Avatar body language does not have to be a direct prosthetic to our corporeal expression. It can extend beyond the human form; this theme has been a part of avatar lore since the very beginning. What are the implications for a non-human body language alphabet? It means that our body language alphabet can (and I would claim already has begun to) include semantic units, attributes, descriptors, and grammars that encompass a superset of human form and behavior.

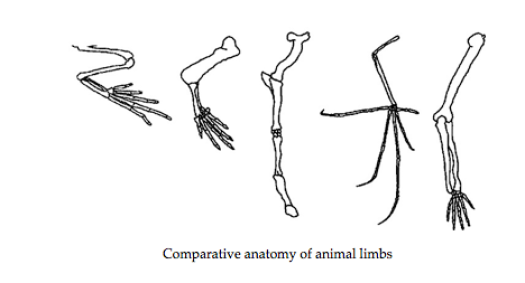

The illustration below shows the pentadactyl (five-digit) morphology of various vertebrate limbs. This shared structure suggests that a common underlying code could be used to express meta-human forms and motions.

A set of parameters (bone lengths, angle offsets, motor control attributes, etc.) can be specified, along with gross morphological settings (e.g., four limbs and a tail; six limbs and no head; no limbs and no head). Once these parameters are provided in the system to accommodate the various locomotion behaviors and forms of body language, they can be manipulated as genes, using interactive evolution interfaces or genetic algorithms, or simply tweaked directly in a customization interface.
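
Treating these parameters as genes can be sketched with a small genetic algorithm. This is a minimal sketch under stated assumptions: the genome fields (`limbs`, `tail`, `head`, `upper_len`, `lower_len`, `hip_angle`) and the `insectoid` scoring function are hypothetical, and an automatic fitness function stands in for the human choices an interactive evolution interface would collect.

```python
import random

# Hypothetical morphology genome: gross body plan plus continuous parameters.
BASE = {"limbs": 4, "tail": True, "head": True,
        "upper_len": 0.5, "lower_len": 0.5, "hip_angle": 0.0}


def mutate(genome, rng, rate=0.3):
    """Return a mutated copy of the genome; each gene changes with probability `rate`."""
    child = dict(genome)
    if rng.random() < rate:
        # Add or remove a pair of limbs so the body stays bilaterally symmetric.
        child["limbs"] = max(0, child["limbs"] + rng.choice([-2, 2]))
    if rng.random() < rate:
        child["tail"] = not child["tail"]
    if rng.random() < rate:
        child["head"] = not child["head"]
    for gene in ("upper_len", "lower_len"):
        if rng.random() < rate:
            child[gene] = max(0.05, child[gene] * rng.uniform(0.8, 1.25))
    if rng.random() < rate:
        child["hip_angle"] += rng.gauss(0.0, 10.0)
    return child


def evolve(fitness, generations=50, pop_size=20, seed=1):
    """Truncation-selection genetic algorithm with elitism.

    In an interactive evolution interface, the `fitness` ranking would be
    replaced by a person picking their favorite creatures each generation.
    """
    rng = random.Random(seed)
    population = [mutate(BASE, rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 4]  # keep the best quarter unchanged
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return max(population, key=fitness)


def insectoid(g):
    """Hypothetical goal: six legs, no tail, long (but capped) leg segments."""
    return -abs(g["limbs"] - 6) - g["tail"] + min(g["upper_len"] + g["lower_len"], 2.0)


print(evolve(insectoid))
```

Swapping `insectoid` for a different scoring function, or for a user’s clicks, steers the same genome toward quadrupeds, serpents, or anything in between.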

But this morphological space of variation doesn’t need to stop at the vertebrates. We’ve seen avatars in the form of fishes, floating eyeballs, cartoon characters, abstract designs—you name it. Artists, in the spirit of performance artist Stelarc, are exploring the expressive possibilities of avatars and remote embodiment. Micha Cardenas, Max Moswitzer, Jeremy Owen Turner and others articulate the very aesthetics of embodiment and avatarhood—the entire possible expression-space. What are the possibilities of having a visual (or even aural) manifestation of yourself in an alternate reality? As I mentioned before, my Second Life avatar is a non-humanoid creature, consisting of a cube with tentacles.

On each side of the cube are fractals based on an image of my face. This cube floats in space where my head would normally be. Attached to the bottom of the cube are several green tentacles that hang like those of a jellyfish. This avatar was built by JoannaTrail Blazer, based on my design. In the illustration, Joanna’s avatar is shown next to mine.

I chose a non-human form for a few reasons. One is that I prefer to avoid the uncanny valley, and I will go to extremes to avoid dull realism, employing instead the visual tools of abstraction and symbolism. By not trying to replicate the image of a real human, I can sidestep the problem of my avatar “not looking like me” or “not looking right”. My non-human avatar also allows me to explore the realm of non-human body language. On the one hand, I can express yes and no by triggering animations that cause the cube to oscillate like a normal head. But I could also express happiness or excitement by triggering an animation that causes the tentacles to flare out and oscillate, or to roll sensually like an inverted bouquet of cattails. Negative emotions could be represented by causing the tentacles to droop limply (reinforced by having the cube-head slump downward). While the cube-head mimics normal human head motions, the tentacles do not correspond to any body language that I am physically capable of generating. They could tap other sources, like animal movement, and basic concepts like energy and gravity, expanding my capacity for expression.
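
The mapping from emotion to tentacle motion could be prototyped as a small function. This is a minimal sketch, assuming emotions arrive as valence and arousal values in [-1, 1] (a common two-axis model, used here as an assumption); the name `tentacle_pose` and every angle and frequency range are hypothetical tuning choices, not the actual avatar’s animations.

```python
import math


def tentacle_pose(valence, arousal, t, count=6):
    """Map an emotional state to the cube-head avatar's pose at time t (seconds).

    valence and arousal are clamped to [-1, 1]. Returns (head_pitch, tentacles),
    where head_pitch is in degrees (negative = slumped forward) and tentacles is
    a list of (flare, sway) pairs in degrees: flare lifts a tentacle away from
    hanging straight down, and sway is a periodic wobble layered on top.
    """
    v = max(-1.0, min(1.0, valence))
    a = max(-1.0, min(1.0, arousal))
    flare = 22.5 * (v + 1.0)            # 0 (limp) when sad, 45 when happy
    amplitude = 7.5 * (a + 1.0)         # 0..15 degrees: calm means little motion
    frequency = 0.5 + 0.75 * (a + 1.0)  # 0.5..2 Hz: excitement speeds the wave
    head_pitch = min(0.0, 20.0 * v)     # only negative feelings slump the cube
    tentacles = []
    for i in range(count):
        phase = 2.0 * math.pi * i / count  # stagger tentacles so the motion ripples
        sway = amplitude * math.sin(2.0 * math.pi * frequency * t + phase)
        tentacles.append((flare, sway))
    return head_pitch, tentacles


pitch, tentacles = tentacle_pose(valence=0.8, arousal=0.6, t=0.25)
print(pitch, [round(s, 1) for _, s in tentacles])
```

Driving flare from valence and sway from arousal keeps the two emotional axes visually separable: a calm, content avatar holds its tentacles out but still, while an agitated, unhappy one droops and trembles.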

Virtual Dogs

I want to come back to the topic of canine body language now, but this time, I’d like to discuss some of the ways that sheer dogginess in the gestalt can be expressed in games and virtual worlds. The canine species has made many appearances throughout the history of animation research, games, and virtual worlds. Rob Fulop, of the ’90s game company PF Magic, created a series of games based on characters made of spheres, including the game “Dogz”. Bruce Blumberg of the MIT Media Lab developed a virtual interactive dog AI, and is also an active dog trainer. Dogs are a great subject for AI: they are easier to simulate than humans, and they are highly emotional, interactive, and engaging.

While prototyping There.com, Will and I developed a dog that would chase a virtual Frisbee tossed by your avatar (throwing involved moving the mouse while hitting the space key to release the Frisbee at the right time). Since I was interested in the “essence of dog energy”, I decided not to focus on the graphical representation of the dog so much as the motion, sound, and overall behavior of the dog. So, for this prototype, the dog had no legs: just a sphere for a body and a sphere for a head (each rendered as toon-shaded circles: flat coloring with an outline). These spheres could move slightly relative to each other, so that the head had a little bounce to it when the dog jumped.
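The text does not say how the head’s bounce was computed. One common way to get that kind of secondary motion is to treat the head as a mass on a damped spring attached to the body, so that it lags when the body launches and then overshoots and settles. The sketch below assumes exactly that model; the function name, the unit mass, and the `stiffness` and `damping` values are hypothetical choices, not the prototype’s code.

```python
def simulate_head_bounce(jump_speed: float, frames: int = 240, dt: float = 1 / 60,
                         stiffness: float = 120.0, damping: float = 6.0) -> list[float]:
    """Vertical offset of the head sphere from its rest spot on the body, per frame.

    Assumption: the head is a unit mass on a damped spring attached to the body.
    """
    offset = 0.0
    # When the body launches upward, the head lags behind it for a moment,
    # so it starts with a relative velocity opposite to the jump.
    velocity = -jump_speed
    offsets = []
    for _ in range(frames):
        acceleration = -stiffness * offset - damping * velocity
        velocity += acceleration * dt  # semi-implicit (symplectic) Euler step
        offset += velocity * dt
        offsets.append(offset)
    return offsets


trace = simulate_head_bounce(jump_speed=3.0)
print(f"dip {min(trace):.3f}, overshoot {max(trace):.3f}, final {trace[-1]:.6f}")
```

With these values the spring is underdamped, so the head first dips, then bounces back past its rest position once before settling, which is the “little bounce” described above.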

The eyes were rendered as two black cartoon dots, and they would disappear when the dog blinked. Two ears, a tail, and a tongue were programmed, because they are expressive components. The ears were animated using forward dynamics, so they flopped around when the dog moved, and hung downward slightly from the force of gravity. I programmed some simple logic to make an oscillating, “panting” force in the tongue which would increase in frequency and force when the dog was especially active, tired, or nervous. I also programmed “ear perkiness” which would cause the ears to aim upwards (still with a little bit of droop) whenever a nearby avatar produced a chat that included the dog’s name. I programmed the dog to change its mood when a nearby avatar produced the chats “good dog” or “bad dog”. And this was just the beginning. Later, I added more AI allowing the dog to learn to recognize chat words, and to bond to certain avatars.
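The chat-driven behaviors above (ears perking at the dog’s name, mood shifting on “good dog” or “bad dog”, and a panting tongue that speeds up and strengthens with arousal) can be sketched as a small state object. This is an illustrative reconstruction, not the There.com code: the `DogState` class, the single `excitement` value standing in for “active, tired, or nervous”, the mood step of 0.5, the ear relaxation rate, and the frequency and force ranges are all assumptions. The forward-dynamics ear simulation is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class DogState:
    name: str
    mood: float = 0.0        # hypothetical scale: -1.0 (unhappy) to +1.0 (happy)
    ear_perk: float = 0.0    # 0.0 = relaxed ears, 1.0 = fully perked
    excitement: float = 0.0  # 0.0 to 1.0; stands in for "active, tired, or nervous"

    def hear_chat(self, text: str) -> None:
        """React to a chat line produced by a nearby avatar."""
        lowered = text.lower()
        if self.name.lower() in lowered:
            self.ear_perk = 1.0  # the renderer still adds a little droop
        if "good dog" in lowered:
            self.mood = min(1.0, self.mood + 0.5)
        elif "bad dog" in lowered:
            self.mood = max(-1.0, self.mood - 0.5)

    def update(self, dt: float) -> None:
        """Let perked ears relax back down over time (dt in seconds)."""
        self.ear_perk = max(0.0, self.ear_perk - 0.5 * dt)

    def tongue_offset(self, t: float) -> float:
        """Panting: an oscillation whose frequency and force grow with excitement."""
        frequency_hz = 1.0 + 3.0 * self.excitement
        force = 0.2 + 0.8 * self.excitement
        return force * math.sin(2.0 * math.pi * frequency_hz * t)


dog = DogState("Rex")
dog.hear_chat("Hey Rex, good dog!")
print(dog.ear_perk, dog.mood)  # 1.0 0.5
```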

Despite the lack of legs and its utterly crude rendering, this dog elicited remarkable puppy-like responses in the people who watched it or played with it. The implication from this is that what makes a dog a dog is not merely the way it looks, but the way it acts—its essence as a distinctly canine space-time energy event. Recall the exaggerated proportions of the human communication homunculus. For this dog, ears, tail, and tongue constituted the vast majority of computational processing. That was on purpose; this dog was intended as a communicator above all else. Consider the visual language of tail wagging that I brought up earlier, a visual language which humans (especially dog-owners) have incorporated into their unconscious vocabularies. What lessons might we take from the subject of wag semiotics, as applied to the art and science of wagging on the internet?

Tail Wagging on the Internet

In the There.com dog, the tail was a critical indicator of the dog’s mood at any given moment: it rose when the dog was alert or aroused, drooped when it was sad or afraid, and wagged when it was happy. Take wagging as an example. The body language information packet for tail wagging consisted of a Boolean value sent from the dog’s Brain to the dog’s Animated Body, representing simply “start wagging” or “stop wagging”. It is important to note that the dog’s Animated Body runs on clients (computers sitting in front of users), whereas the dog’s Brain resides on servers (arrays of big honkin’ computers in a data center that manage the “shared reality” of all users). The reason for this mind/body separation is to make sure that body language messaging (as well as the dog’s overall emotional state, physical location and orientation, and other aspects of its dynamic state) is conveyed accurately and consistently to all users.

Clients are subject to lagging animation frame rates, dropped internet messages, and other issues, so no single client can be the authority on what the dog is doing. All users whose avatars are hanging out in the part of the virtual world where the dog is need to see the same behavior; they are all “seeing the same dog”, virtually speaking.

It would be unnecessary (and expensive in terms of internet traffic) for the server to send individual wags several times a second to all the clients. Each client’s Animated Body code is perfectly capable of performing this repetitive animation. And, because of different rendering speeds among various clients, lag times, etc., the wagging tail on your client might be moving left-right-left-right, while the wagging tail on my client is moving right-left-right-left. In other words, they might be out of phase or wagging at slightly different speeds. These slight differences have almost no effect on the reading of the wag. “I am wagging my tail” is the point. That’s a Boolean message: one bit. The reason I am laboring over this point harkens back to the chapter on a Body Language Alphabet: a data-compressed message, easy to zip through the internet, is efficient for helping virtual worlds run smoothly. It is also inspired by biosemiotics: Mother Nature’s efficient message-passing protocol.
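Here is a minimal sketch of the Brain/Animated Body split described above: the server-side Brain sends a single wag state, and each client animates the tail on its own, with its own phase and speed. The class names, the one-byte encoding (the information content is one bit, padded to a byte here for simplicity), the 30-degree swing, and the 2.5 to 3.5 Hz speed range are all hypothetical, not There.com’s actual protocol.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class WagMessage:
    """The whole body language packet: one bit of information."""
    wagging: bool

    def encode(self) -> bytes:
        return b"\x01" if self.wagging else b"\x00"

    @staticmethod
    def decode(payload: bytes) -> "WagMessage":
        return WagMessage(payload == b"\x01")


class ClientTail:
    """Client-side Animated Body: turns the one-bit state into motion locally."""

    def __init__(self, rng: random.Random) -> None:
        self.wagging = False
        # Each client picks its own phase and a slightly different speed, so two
        # screens may show left-right vs. right-left; the reading is the same.
        self.phase = rng.uniform(0.0, 2.0 * math.pi)
        self.speed_hz = rng.uniform(2.5, 3.5)

    def receive(self, payload: bytes) -> None:
        self.wagging = WagMessage.decode(payload).wagging

    def tail_angle(self, t: float) -> float:
        """Tail angle in degrees at local time t (seconds)."""
        if not self.wagging:
            return 0.0
        return 30.0 * math.sin(2.0 * math.pi * self.speed_hz * t + self.phase)


# Server-side Brain: decides the dog is happy and broadcasts ONE message
# (not one message per wag) to every client near the dog.
clients = [ClientTail(random.Random(seed)) for seed in (1, 2)]
payload = WagMessage(wagging=True).encode()
for client in clients:
    client.receive(payload)
```

Two clients that receive the same message will generally show different tail angles at the same instant, yet both display “the dog is wagging”, which is all the message needs to carry.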

On Cuttlefish and Dolphins

The homunculus of Homo sapiens might evolve into a more plastic form: maybe not on a genetic or species level, but at least during the lifetimes of individual brains, assisted by the scaffolding of culture and virtual world technology. This plasticity could approach strange proportions, even as our physical bodies remain roughly the same. As we spend more of our time socializing and interacting on the internet (partly so that we travel less and reduce greenhouse gas emissions), our embodiment will naturally take on the forms appropriate to the virtual spaces we occupy. These spaces will not necessarily mimic the spaces of the real world, nor will our embodiments always look and feel like our own bodies. And with these new virtual embodiments will come new layers of body language. Jaron Lanier points to cephalopods as species capable of animated texture mapping and physical morphing for communication and camouflage, feats that are outside the range of physical human expression.

In reference to avatars, Lanier says, “The problem is that in order to morph, humans must design avatars in laborious detail in advance. Our software tools are not yet flexible enough to enable us, in virtual reality, to think ourselves into different forms. Why would we want to? Consider the existing benefits of our ability to create sounds with our mouths. We can make new noises and mimic existing ones, spontaneously and instantaneously. But when it comes to visual communication, we are hamstrung…We can learn to draw and paint, or use computer-graphics design software. But we cannot generate images at the speed with which we can imagine them” (Lanier 2006).

Once we have developed the various non-humanoid puppeteering interfaces that would allow Lanier’s vision, we will begin to invent new visual languages for realtime communication. Lanier believes that in the future, humans will be truly “multihomuncular”.

Researchers from Aberdeen University and the Polytechnic University of Catalonia found that dolphins use discrete units of body language as they swim together near the surface of the water. They observed efficiency in these signals, similar to what occurs in frequently-used words in human verbal language (Ferrer i Cancho and Lusseau 2009). As human natural language goes online, and as our body language gets processed, data-compressed, and alphabetized for efficient traversal over the internet, we may start to see more patterns of our embodied language that resemble those created by dolphins, and many other social species besides. The background communicative buzz of the biosphere may start to make more sense in the process of whittling our own communicative energy down to its essential features, and being able to analyze it digitally. With a universal body language alphabet, we might someday be able to animate our skin like cephalopods, or speak “dolphin”, using our tails, as we lope across the virtual waves.
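In human words, this kind of efficiency is usually described as Zipf’s law of abbreviation: the more often a signal is used, the shorter it tends to be. One simple way to check a set of signals for that tendency is a rank correlation between frequency and length. The sketch below uses Goodman and Kruskal’s gamma; the study’s own measures and statistics may differ, and the numbers are invented for illustration, not data from the dolphin research.

```python
from itertools import combinations


def goodman_kruskal_gamma(xs: list[float], ys: list[float]) -> float:
    """Rank association in [-1, 1]; -1 means "more frequent is always shorter"."""
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        sign = (x1 - x2) * (y1 - y2)
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1  # tied pairs (sign == 0) are ignored
    untied = concordant + discordant
    return (concordant - discordant) / untied if untied else 0.0


# Invented counts and lengths for five hypothetical signal types (NOT data from
# the dolphin study): how often each was seen, and how many body language
# units it takes to perform.
frequency = [120, 80, 40, 15, 5]
length_in_units = [1, 1, 2, 3, 4]
print(goodman_kruskal_gamma(frequency, length_in_units))  # -1.0
```

A strongly negative value is the signature of an efficient code: the most common messages are the cheapest to send, which is the same principle behind compressing body language into a compact alphabet.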