Nick Bostrom is a philosopher known for his work on the future dangers of AI. Many other notable people, including Stephen Hawking, Elon Musk, and Bill Gates, have commented on the existential threats posed by a future AI. This is an important subject to discuss, but I believe that many careless assumptions are being made about what AI actually is, and what it will become.

Yea yea, there’s Terminator, Her, Ex Machina, and so many other science fiction films that touch upon deep and relevant themes about our relationship with autonomous technology. Good stuff to think about (and entertaining). But AI is much more boring than what we see in the movies. AI can be found distributed in little bits and pieces in cars, mobile phones, social media sites, hospitals…just about anywhere that software can run and where people need some help making decisions or getting new ideas.

John McCarthy, who coined the term “Artificial Intelligence” in 1956, said something that is totally relevant today: “as soon as it works, no one calls it AI anymore.” Given how poorly defined AI is, and how easily its definition seems to morph, it is curious how excited some people get about its existential dangers. Perhaps these people are afraid of AI precisely because they do not know what it is.

Elon Musk, who warns us of the dangers of AI, was asked the following question by Walter Isaacson: “Do you think you maybe read too much science fiction?” To which Musk replied:

“Yes, that’s possible”….“Probably.”

Should We Be Terrified?

In an article with the very subtle title, “You Should Be Terrified of Superintelligent Machines”, Bostrom says this:

“An AI whose sole final goal is to count the grains of sand on Boracay would care instrumentally about its own survival in order to accomplish this.”

Point taken. If we built an intelligent machine to do that, we might get what we asked for. Fifty years later we might be telling it, “we were just kidding! It was a joke. Hahahah. Please stop now. Please?” It will push us out of the way and keep counting…and it just might kill us if we try to stop it.

Part of Bostrom’s argument is that if we build machines to achieve goals in the future, then these machines will “want” to survive in order to achieve those goals.

“Want?”

Bostrom warns against anthropomorphizing AI. Amen! In a TED Talk, he even shows a picture of the typical scary AI robot – like so many that have been polluting the airwaves of late. He discounts this as anthropomorphizing AI.

And yet Bostrom frequently refers to what an AI “wants” to do, the AI’s “preferences”, “goals”, even “values”. How can anyone be certain that an AI can have what we call “values” in any way that we can recognize as such? In other words, are we able to talk about “values” in any other context than a human one?

From my experience in developing AI-related code for the past 20 years, I can say this with some confidence: it is senseless to talk about software having anything like “values”. By the time something vaguely resembling “value” emerges in AI-driven technology, humans will be so intertwingled with it that they will not be able to separate themselves from it.

It will not be easy – or possible – to distinguish our values from “its” values. In fact, it is quite possible that we won’t refer to it as “it”. “It” will be “us”.

Bostrom’s fear sounds like fear of the Other.

That Disembodied Thing Again

Let’s step out of the ivory tower for a moment. I want to know how that AI machine on Boracay is going to actually go about counting grains of sand.

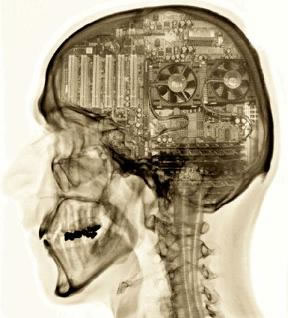

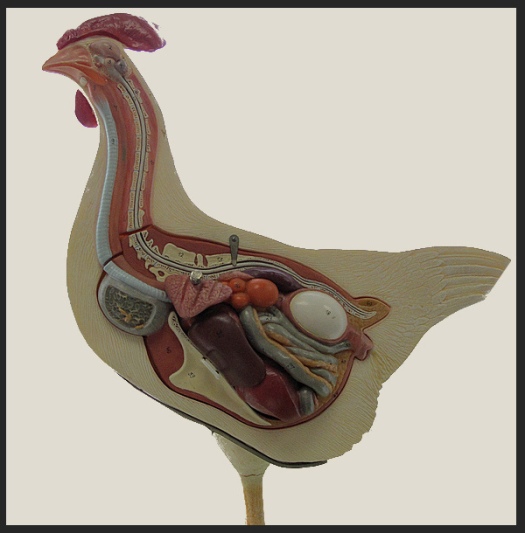

Many people who talk about AI refer to amazing physical feats that an AI would supposedly be able to accomplish. But they often leave out the part about “how” this is done. We cannot separate the AI (running software) from the physical machinery that has an effect on the world – any more than we can talk about what a brain can do once it has been taken out of one’s head and placed on a table.

It can jiggle. That’s about it.

Once again, the Cartesian separation of mind and body rears its ugly head – as it were – and deludes people into thinking that they can talk about intelligence in the absence of a physical body. Intelligence doesn’t exist outside of its physical manifestation. Can’t happen. Never has happened. Never will happen.

Ray Kurzweil predicted that by 2023 a $1,000 laptop would have the computing power and storage capacity of a human brain. When put in these terms, it sounds quite plausible. But if you were to extrapolate that to make the assumption that a laptop in 2023 will be “intelligent” you would be making a mistake.

Many people who talk about AI make reference to computational speed and bandwidth. Kurzweil helped to popularize a trend for plotting computer performance along with human intelligence, which perpetuates computationalism. Your brain doesn’t just run on electricity: synapse behavior is electrochemical. Your brain is soaking in chemicals provided by this thing called the bloodstream – and these chemicals have a lot to do with desire and value. And… surprise! Your body is soaking in these same chemicals.

Intelligence resides in the bodymind. Always has, always will.

So, when there’s a lot of talk about AI and hardly any mention of the physical technology that actually does something, you should be skeptical.

Bostrom asks: when will we have achieved human-level machine intelligence? And he defines this as the ability “to perform almost any job at least as well as a human”.

I wonder if his list of jobs includes this:

Intelligence is Multi-Multi-Multi-Dimensional

Bostrom plots a one-dimensional line which includes a mouse, a chimp, a stupid human, and a smart human. And he considers how AI is traveling along this line, and how it will fly past humans.

Intelligence is not one dimensional. It’s already a bit of a simplification to plot mice and chimps on the same line – as if there were some single number that you could extract from each and compute which is greater.

A saying often attributed to Charles Darwin goes: “It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is most adaptable to change.”

Is a bat smarter than a mouse? Bats are proverbially blind (dumber?), but their echolocation is miraculous (smarter?).

Is an autistic savant who can compose complicated algorithms but can’t hold a conversation smarter than a charismatic but dyslexic soccer coach who inspires kids to be their best? Intelligence is not one-dimensional, and this is ESPECIALLY true when comparing AI to humans. Plotting them both on a single one-dimensional line is not just an oversimplification. By plotting AI on the same line as human intelligence, Bostrom is committing anthropomorphism.

AI cannot be compared apples-to-apples to human intelligence because it emerges from human intelligence. Emergent phenomena by their nature operate on a different plane than what they emerge from.

WE HAVE ONLY OURSELVES TO FEAR BECAUSE WE ARE INSEPARABLE FROM OUR AI

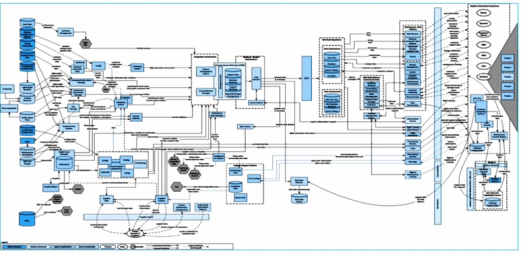

We and our AI grow together, side by side. AI evolves with us, for us, in us. It will change us as much as we change it. This is the posthuman condition. You probably have a smartphone (you might even be reading this article on it). Can you imagine what life was like before the internet? For half of my life, there was no internet, and yet I can’t imagine not having the internet as a part of my brain. And I mean that literally. If you think this is far-fetched, just wait another 5 years. Our reliance on the internet, self-driving cars, automated this, automated that, will increase beyond our imaginations.

Posthumanism is pulling us into the future. That train has left the station.

But…all these technologies that are so folded into our daily lives are primarily about enhancing our own abilities. They are not about becoming conscious or having “values”. For the most part, the AI that is growing around us is highly distributed and highly integrated with our activities – OUR values.

I predict that Siri will not turn into a conscious being with morals, emotions, and selfish ambitions…although others are not quite so sure. Okay – I take it back; Siri might have a bit of a bias towards Apple, Inc. Ya think?

Giant Killer Robots

There is one important caveat to my argument. Even though I believe that the future of AI will not be characterized by a frightening army of robots with agendas, we could potentially face a real threat: if military robots that are ordered to kill and destroy – and use AI and sophisticated sensor fusion to outsmart their foes – were to get out of hand, then things could get ugly.

But with the exception of weapon-based AI that is housed in autonomous mobile robots, the future of AI will be mostly custodial, highly distributed, and integrated with our own lives: our clothes, houses, cars, and communications. Over time, we will be less and less able to separate it from ourselves. We won’t see it as “other” – we might just see ourselves as having more abilities than we did before.

Those abilities could include a better capacity to kill each other, but also a better capacity to compose music, build sustainable cities, educate kids, and nurture the environment.

If my interpretation is correct, then Bostrom’s alarm bells might be better aimed at ourselves. And in that case, what’s new? We have always had the capacity to create love and beauty … and death and destruction.

To quote David Byrne: “Same as it ever was”.

Maybe Our AI Will Evolve to Protect Us And the Planet

Here’s a more positive future to contemplate:

AI will not become more human-like – which is analogous to how the body of an animal does not look like the cells that it is made of.

Billions of years ago, single cells decided to come together in order to make bodies, so they could do more using teamwork. Some of these cells were probably worried about the bodies “taking over”. And oh did they! But, these bodies also did their little cells a favor: they kept them alive and provided them with nutrition. Win-win baby!

To conclude, I disagree with Bostrom: we should not be terrified.

Terror is counter-productive to human progress.

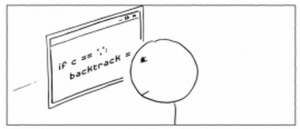

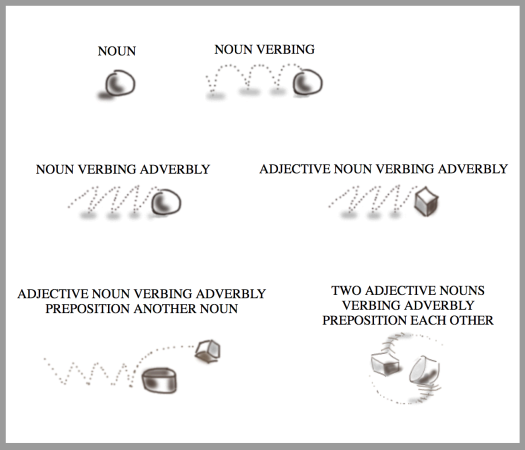

We can only hold so many variables in our minds at once. I have heard figures like “about 7”. But of course, this raises the question of what counts as a “thing”. Let’s just say that there are only so many threads of a conversation, only so many computer variables, only so many aspects to a system that can be held in the mind at once. It’s like juggling.

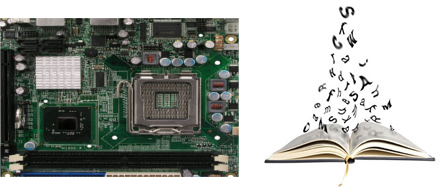

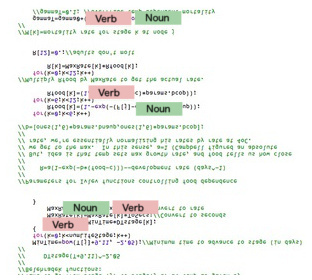

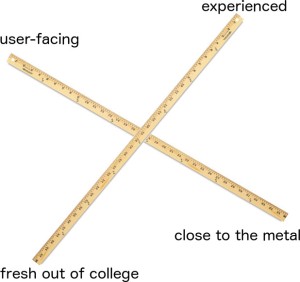

Graduating to C++ made a positive difference. Object-oriented programming affords ways to encapsulate the aspects of the code that are close to the metal, allowing one to ascend to higher levels of abstraction, and express the things that really matter (I realize many programmers would take issue with this – claiming that hardware matters a lot).
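To make the idea of encapsulation concrete, here is a minimal sketch. It is a made-up illustration, not code from any real project: the class name `PackedColor`, its methods, and the 0xRRGGBBAA layout are all assumptions chosen for the example. The bit shifting (the part that is close to the metal) lives inside the class, and the caller only sees things that matter at a higher level, like "give me a darker version of this color".

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical example class: a color packed into one 32-bit word.
// The 0xRRGGBBAA layout is an assumption made for this sketch.
class PackedColor {
public:
    PackedColor(std::uint8_t r, std::uint8_t g, std::uint8_t b,
                std::uint8_t a = 255)
        : bits_((std::uint32_t{r} << 24) | (std::uint32_t{g} << 16) |
                (std::uint32_t{b} << 8) | std::uint32_t{a}) {}

    // Accessors hide the shifts and masks from the caller.
    std::uint8_t red() const { return static_cast<std::uint8_t>(bits_ >> 24); }
    std::uint8_t green() const { return static_cast<std::uint8_t>((bits_ >> 16) & 0xFF); }
    std::uint8_t blue() const { return static_cast<std::uint8_t>((bits_ >> 8) & 0xFF); }
    std::uint8_t alpha() const { return static_cast<std::uint8_t>(bits_ & 0xFF); }

    // Higher-level operation: scale the color channels down by a percentage,
    // leaving alpha untouched. Callers never touch the packed bits.
    PackedColor darkened(int percent) const {
        assert(percent >= 0 && percent <= 100);
        auto scale = [percent](std::uint8_t c) {
            return static_cast<std::uint8_t>(c * (100 - percent) / 100);
        };
        return PackedColor(scale(red()), scale(green()), scale(blue()), alpha());
    }

private:
    std::uint32_t bits_;  // storage detail, free to change without affecting callers
};
```

With something like this in place, code elsewhere can say `PackedColor(200, 100, 50).darkened(50)` and never think about shifts or masks. If the storage layout changes later, only the inside of the class has to change.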

I don’t know about you, but as a developer, I really hate it when a software project gets pulled apart, with large components being rendered inoperable, while key parts are re-written. Would a surgeon kill a patient in order to do a kidney transplant?

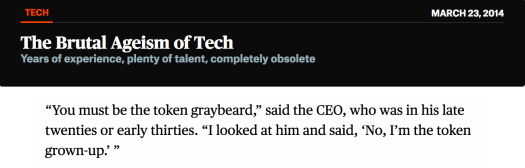

I’ve worked in many high-tech startup companies in the San Francisco Bay Area. I am now 52, and I program slowly and thoughtfully. I’m kind of like a designer who writes code; this may become apparent as you read on :)
